Graeme Lewis

Chief Pipeline Officer

Exciting times ahead for Einstein Discovery and Snowflake!

If you’re part of a Salesforce-enabled business, you have some huge reasons to be excited about the possibilities of AI over the next six months and beyond. By combining Einstein Discovery (ED) predictive models, which offer a fast path to operationalised value, with the incredible data structures, compute power and service layer provided by Snowflake, accurate predictive insights can now be surfaced and shared faster than ever before.


What are Snowflake and Einstein Discovery?

Snowflake is a proprietary cloud architecture for virtual data warehouses that was built with massive scalability in mind from the very start. Everything about Snowflake is structured to drive efficient storage, rapidly scalable compute power and the easy (but incredibly secure) sharing of data with any other Snowflake user on the planet.

Einstein Discovery is a core part of the Salesforce Einstein Analytics Plus platform (now CRM Analytics). It provides an easy-to-use suite of tools for quickly building predictive models that can be deployed into a Salesforce org and efficiently operationalised at enterprise scale.

“Rapidly scalable compute and storage combined with efficient distribution at the enterprise level.”

How are Einstein Discovery predictive models typically built?

The Lightfold process for building and deploying an ED model usually follows these broad steps over 6-8 weeks:

1. Determine the base hypothesis and predictive goal.

2. Source, connect and prepare the necessary data to train the model.

3. Build the initial model and rapidly refine and iterate.

4. Deploy the operationalised model to a pilot user group.

5. Test the model.

6. Refine the model and deploy it for all relevant users.

7. Establish a program for monitoring and improving the model over time.

By far the single longest and least efficient part of the process is sourcing, connecting, and preparing the data.

Why does it take so long to source the data and get it prepped? Partly it’s the complexity of the transformations and logic needed to structure data in a way that makes sense for a particular predictive model, but mostly it’s because usually only about half the relevant data is stored in the CRM. The rest is stored in silos all over the business.

Negotiating access to that data can take weeks, and that is only the start. Next you need to convince one or more DBAs or product admins to coordinate with your Analytics Studio admin to set up however many connector types and individual connections you need. Even after all that, considerable value is usually left on the table because project time-frames need to keep rolling. Either we are forced to accept a model that lacks potentially powerful predictors, or the project begins to stall and lose momentum.

“We all know the feeling of a data-driven project with amazing potential grinding to a halt, losing the spotlight, and becoming mired in development hell. Snowflake can help.”

What could a Snowflake powered Einstein Discovery project look like?

Of the many things that Snowflake does well, there are two in particular that act as major power-ups for Einstein Discovery projects.

First, Snowflake makes the management and sharing of data highly efficient, scalable and rapid. By rapid, I mean potentially instantaneous: Snowflake is the first external data analytics solution that can provide a real-time query service to the CRM Analytics platform. That means assets in your Snowflake data warehouse or data lake can instantly become first-class citizens in Analytics Studio, queried live rather than copied in on a schedule. It’s a lot to get your head around, but in effect any Snowflake asset exposed through that live connection can be treated as a CRM Analytics dataset.
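On the Snowflake side, making an asset visible to CRM Analytics largely comes down to granting read access to the role that the connection logs in with. The sketch below is a minimal, hedged illustration that only generates standard Snowflake `GRANT` statements for one schema. The role, warehouse, database and schema names are hypothetical placeholders, and the exact privileges the CRM Analytics connector requires should be confirmed against Salesforce’s own setup documentation.

```python
def read_access_grants(role: str, warehouse: str, database: str, schema: str) -> list[str]:
    """Build Snowflake GRANT statements giving `role` read-only access to one schema.

    All names are hypothetical placeholders; adjust to your own account.
    """
    qualified_schema = f"{database}.{schema}"
    return [
        # Allow the role to run queries on a warehouse (compute).
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role};",
        # Allow the role to see the database and schema.
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",
        f"GRANT USAGE ON SCHEMA {qualified_schema} TO ROLE {role};",
        # Read access to the tables and views that already exist in the schema.
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {qualified_schema} TO ROLE {role};",
        f"GRANT SELECT ON ALL VIEWS IN SCHEMA {qualified_schema} TO ROLE {role};",
        # Read access to tables created in the schema later on.
        f"GRANT SELECT ON FUTURE TABLES IN SCHEMA {qualified_schema} TO ROLE {role};",
    ]


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    for statement in read_access_grants("CRMA_READER", "CRMA_WH", "SALES_DB", "CURATED"):
        print(statement)
```

Keeping the grants scoped to a single curated schema means only data that has been deliberately shaped for analytics is visible to the live connection.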

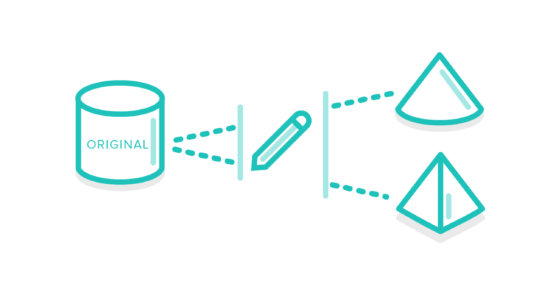

The second big power-up is Snowflake’s zero-copy cloning. We can clone databases, schemas and tables to create as many ‘copy-less’ working environments as we need to manipulate and aggregate the data (a key part of any Discovery project) into whatever format we want to run a model on. A clone initially shares the original’s storage but is independent of it from then on, so this work can happen in parallel with the normal daily running of the warehouse or lake without disturbing it. Because cloning is so quick, simply re-cloning is an easy way to stay up to date with new data coming in.
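Cloning is driven by Snowflake’s `CLONE` clause. The hedged sketch below generates the statements for a disposable prep schema: it clones a source schema, then defines an aggregated, one-row-per-account view on top of the clone. The schema names, the `OPPORTUNITIES` table and its `ACCOUNT_ID`, `AMOUNT` and `IS_WON` columns are all hypothetical assumptions, not a prescribed Discovery layout.

```python
def prep_statements(source_schema: str, prep_schema: str) -> list[str]:
    """Build SQL for a cloned prep schema plus a model-ready aggregate view.

    Table and column names below are hypothetical, for illustration only.
    """
    return [
        # Zero-copy clone: starts out sharing the source's storage; later
        # changes to either side do not affect the other.
        f"CREATE OR REPLACE SCHEMA {prep_schema} CLONE {source_schema};",
        # Aggregate raw opportunity rows in the clone into one row per account.
        f"""CREATE OR REPLACE VIEW {prep_schema}.ACCOUNT_FEATURES AS
SELECT ACCOUNT_ID,
       COUNT(*) AS OPPORTUNITY_COUNT,
       SUM(AMOUNT) AS TOTAL_AMOUNT,
       AVG(IFF(IS_WON, 1, 0)) AS WIN_RATE
FROM {prep_schema}.OPPORTUNITIES
GROUP BY ACCOUNT_ID;""",
    ]


if __name__ == "__main__":
    for statement in prep_statements("SALES_DB.RAW", "SALES_DB.ED_PREP"):
        print(statement)
```

Because the first statement uses `CREATE OR REPLACE`, re-running it re-clones from the current state of the source, which is one simple way to refresh the prep environment. Each statement could be run from a Snowflake worksheet or through the Snowflake Python connector’s `cursor.execute`.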

“Snowflake provides a dramatic increase in speed and efficiency for the hardest step in any Einstein Discovery project – data sourcing and preparation.”

I cannot wait to implement an Einstein Discovery project in a business where marketing data, web analytics, app usage stats, sales history, case history, activity history, CSAT, NPS, geo-data, Customer 360 and any other structured or semi-structured data sources are blended together through the lightning-fast and secure combination of Salesforce CRM and Snowflake. We think this kind of environment can be pioneered right now thanks to the deep partnership between Snowflake and Salesforce, and our team is ready and willing to explore how much faster you can get to value with a rig like that.
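To make “blended together” concrete: a Discovery training dataset is typically one row per entity being predicted on, with columns drawn from each source. The minimal sketch below is a pure-Python illustration using three hypothetical sources and field names (CRM opportunities, web sessions and latest NPS scores). In practice this join would run as SQL inside Snowflake rather than in Python.

```python
from collections import defaultdict


def blend(opportunities, web_sessions, latest_nps):
    """Blend three hypothetical sources into one feature row per account.

    opportunities: list of {"account_id": str, "is_won": bool}   (CRM)
    web_sessions:  list of {"account_id": str}                   (web analytics)
    latest_nps:    dict of account_id -> most recent NPS score   (survey tool)
    """
    # Every account seen in any source gets a row with neutral defaults.
    rows = defaultdict(lambda: {"opportunities": 0, "won": 0, "web_sessions": 0, "nps": None})
    for opp in opportunities:
        row = rows[opp["account_id"]]
        row["opportunities"] += 1
        row["won"] += int(opp["is_won"])
    for session in web_sessions:
        rows[session["account_id"]]["web_sessions"] += 1
    for account_id, score in latest_nps.items():
        rows[account_id]["nps"] = score
    # Return a plain dict so later lookups don't silently create new accounts.
    return dict(rows)


if __name__ == "__main__":
    opps = [{"account_id": "A1", "is_won": True}, {"account_id": "A1", "is_won": False}]
    sessions = [{"account_id": "A1"}, {"account_id": "A2"}]
    print(blend(opps, sessions, {"A2": 9}))
```

An account that appears in only some sources still gets a row, with zero counts or `None` for the rest; deciding how to treat those gaps is exactly the kind of data-prep choice that shapes a Discovery model.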

Let’s make it real. What’s possible in your business?

So now we have some solid aspirational ideas about powerful applications for the combination of Snowflake and Einstein Discovery, but what I’d really like to find out is what you think. What kind of predictions and use cases become possible in your business when you know for certain that you can get data of any volume prepped for use in Einstein Discovery in hours or days rather than weeks or months? Comment below or send us a message; we’d love to talk to you about the possibilities!